TSMC Is the Real Bottleneck, Data Centers Bypass the Grid, & DeepSeek's Training Breakthrough

TSMC admits silicon is the bottleneck, behind-the-meter generation becomes table stakes, and DeepSeek publishes the framework for R2

TSMC Admits It Underinvested and Now Everyone Pays the Price

TSMC reported blockbuster earnings, but the most important part of the earnings call was CEO C.C. Wei admitting that silicon from TSMC is the bottleneck limiting AI growth.

Wei said one of his customers’ customers told him directly: “Silicon from TSMC is a bottleneck, and asked me not to pay attention to all others, because they have to solve the silicon bottleneck first.”

The company is responding with record capital expenditure of $52 to $56 billion this year, up 27% to 37% from last year. But TSMC’s revenue has grown 50% since 2022, while capex only grew 10% over that period. They underinvested, and are playing catch-up.
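Those percentages can be sanity-checked with simple arithmetic. The sketch below uses only the figures quoted above; the variable names are illustrative, and it assumes the 10% capex growth and 50% revenue growth cover the same period.

```python
# Back out last year's capex from this year's guidance and the quoted growth range.
guidance_low, guidance_high = 52.0, 56.0   # USD billions, this year's capex guidance
growth_low, growth_high = 0.27, 0.37       # quoted year-over-year increase

# If the numbers are consistent, both ends should imply about the same base.
implied_from_low = guidance_low / (1 + growth_low)
implied_from_high = guidance_high / (1 + growth_high)

# Capex per dollar of revenue, relative to 2022 (revenue +50%, capex +10%).
capex_intensity_vs_2022 = 1.10 / 1.50

print(f"Implied prior-year capex: ${implied_from_low:.1f}B and ${implied_from_high:.1f}B")
print(f"Capex intensity vs 2022: {capex_intensity_vs_2022:.0%}")
```

Both ends of the guidance range imply a prior-year base of roughly $41 billion, so the quoted percentages are internally consistent. The second figure says capex per dollar of revenue fell to about 73% of its 2022 level over the period cited.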

And that catch-up will take years. Wei was blunt: “If you build a new fab, it takes two to three years. So even we start to spend $52 billion to $56 billion, the contribution to this year is almost none, and 2027, a little bit. We are looking for 2028-2029 supply.”

This puts AI infrastructure plans in a bind. Stargate, xAI’s Colossus, Meta’s data centers, all of it depends on chips that TSMC cannot supply fast enough. The hyperscalers are losing money now because they cannot get enough silicon. Wei admitted as much: “Our headache right now is the demand and supply gap we need to work hard to narrow.”

Wei also revealed his nervousness about making the wrong bet. “You essentially try to ask whether the AI demand is real or not. I’m also very nervous about it. If we did not do it carefully, that will be a big disaster to TSMC for sure.” He spent months talking to cloud providers to verify demand. They showed him evidence that AI is driving real business growth and healthy financial returns. He believes the AI megatrend is real.

But that fear of overinvesting is exactly the problem. TSMC has a near-monopoly on leading-edge chips. They have little to lose by being cautious, but they would be left holding the bag if they overbuilt and demand disappeared.

Wei was asked about Intel directly and dismissed the threat: “In these days, it’s not money to help you to compete.” Intel and Samsung are years behind on leading-edge nodes. Wei knows it. Just look at their relative capex spend.

If TSMC faced credible competition, the calculus would be different. A competitor catching up would mean lost business, not just lost upside. TSMC would have been forced to invest more aggressively years ago to defend market share. Instead, they played it safe, and now the entire industry is paying for that decision with a multi-year chip shortage.

And it’s hard to see that underinvestment changing until Intel and Samsung become credible alternatives. TSMC worries more about excess capacity than about losing customers, and only competition (or a government backstop so they don’t hold the bag) will flip that.

Otherwise, the pace of the AI buildout depends on whether C.C. Wei guessed right about demand three years from now. The entire industry is betting on one company’s capex decisions.

Stargate Turns One, and AI Data Centers Are Buying Jet Engines

One year ago today, President Trump stood alongside Sam Altman and Masayoshi Son to announce Stargate, the $500 billion AI infrastructure project. This week, OpenAI announced community energy commitments for Stargate sites, promising to fund grid upgrades and not raise local electricity prices.

But the more interesting story is what AI data centers have already been doing to solve the power problem: bypassing the grid entirely.

Elon Musk showed the industry how fast you could move. In 2024, xAI built Colossus in Memphis in 122 days. The local utility could only provide 50 megawatts, but xAI needed triple that. So Musk brought in 35 methane gas turbines with 421 megawatts of combined capacity.

Now everyone is following his playbook. OpenAI ordered 29 gas turbines capable of producing 34 megawatts each for Stargate’s Abilene site, totaling 986 megawatts. That is enough to run up to half a million GPUs in GB200 NVL72 racks. Meta’s Hyperion project in Louisiana is using three H-class natural gas turbines for a facility that will eventually scale to 5 gigawatts.
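The turbine figures are easy to check by hand. The sketch below reproduces them from the quoted numbers alone; the per-chip figure is a derived, all-in budget that assumes every megawatt goes to half a million chips, not a measured spec.

```python
# Figures quoted in the text; nothing here is measured data.
xai_turbines, xai_total_mw = 35, 421
stargate_turbines, stargate_mw_each = 29, 34
chip_count = 500_000  # "up to half a million", treated as an upper bound

# Average capacity per turbine at Colossus.
xai_mw_per_turbine = xai_total_mw / xai_turbines

# Total onsite capacity ordered for Abilene.
stargate_total_mw = stargate_turbines * stargate_mw_each

# Implied power budget per chip, including cooling and other facility overhead.
kw_per_chip = stargate_total_mw * 1000 / chip_count

print(f"Colossus: {xai_mw_per_turbine:.1f} MW per turbine")
print(f"Abilene: {stargate_total_mw} MW total, {kw_per_chip:.2f} kW per chip")
```

The Abilene total matches the 986 megawatts quoted, and the half-million figure works out to just under 2 kilowatts per chip once facility overhead is included.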

In Texas alone, tens of gigawatts of data center load requests pour in each month, but barely more than a gigawatt has been approved in the past year. SemiAnalysis estimates that 27% of data center facilities will be fully powered by onsite generation by 2030, up from basically nothing a few years ago. Natural Gas Intel projects 35 gigawatts of behind-the-meter data center power by 2030.

But all the investment created a new problem: turbine shortages. Order a GE Vernova LM6000 today and the wait is three to five years. Siemens has similar backlogs. So data centers are getting creative.

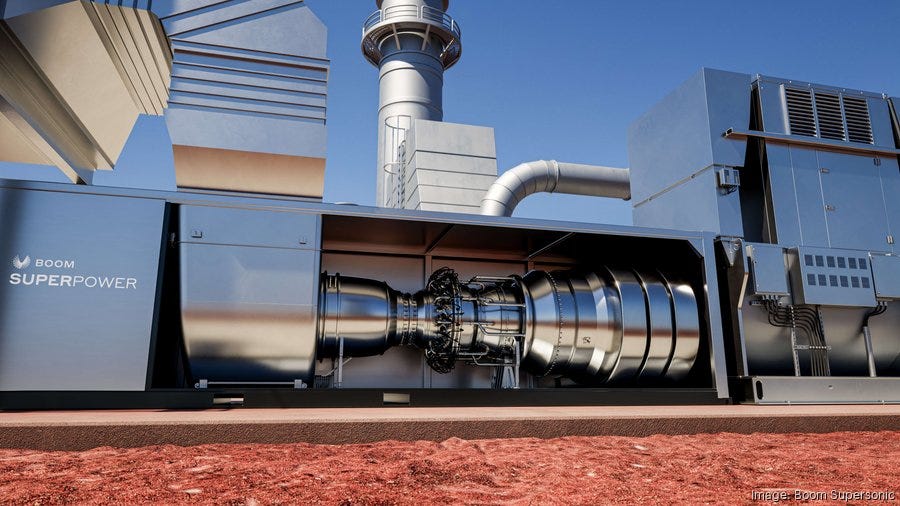

Boom Supersonic raised $300 million to build natural gas turbines for AI data centers. Their Superpower turbine generates 42 megawatts and fits in a shipping container. It uses the same core technology as their Symphony supersonic jet engine, with roughly 80% shared hardware. Crusoe ordered 29 units for $1.25 billion to generate 1.21 gigawatts.
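The Crusoe order implies a rough price per unit and per kilowatt. This is a back-of-the-envelope sketch from the quoted figures only; it assumes the $1.25 billion covers just the 29 turbines, which the source does not confirm.

```python
# Quoted order details for Boom's Superpower turbines.
units, mw_each = 29, 42
order_usd = 1.25e9

# Nameplate capacity of the full order, in megawatts.
total_mw = units * mw_each

# Implied price per turbine and per kilowatt of capacity.
usd_per_unit = order_usd / units
usd_per_kw = order_usd / (total_mw * 1000)

print(f"Total capacity: {total_mw} MW")
print(f"Per unit: ${usd_per_unit / 1e6:.1f}M, per kW: ${usd_per_kw:,.0f}")
```

At 42 megawatts each, 29 units come to 1,218 megawatts, close to the 1.21 gigawatts quoted; the small gap is presumably rounding in the per-unit figure. The implied price is about $43 million per turbine, or roughly $1,000 per kilowatt.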

Sam Altman was an early Boom investor. According to Boom CEO Blake Scholl, both Altman and Crusoe co-founder Cully Cavness called him and said: “Blake, we need you to re-sequence the product line, to do the power turbine first and the airplane second.”

This is the state of AI infrastructure in 2026. The companies building the future of AI cannot wait for the grid. They are buying turbines, repurposing jet engines, and generating their own power. OpenAI’s community energy commitments are smart politics, but behind-the-meter generation has become table stakes for anyone serious about speed.

DeepSeek’s mHC Paper Could Power R2

DeepSeek kicked off 2026 with a technical paper that solves a fundamental problem in scaling large language models. The paper, co-authored by founder Liang Wenfeng and 19 researchers, introduces Manifold-Constrained Hyper-Connections, or mHC.

As AI models get bigger, they become unstable during training. Information flowing through the network can amplify out of control. DeepSeek found that in a 27 billion parameter model, signals were amplifying by 3000x, causing training to collapse entirely.

mHC adds guardrails by constraining how information mixes between layers so that signals stay balanced no matter how large the model gets. In other words, the model can go deeper without blowing up.
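The paper's exact formulation is not reproduced here. As a minimal sketch, assume the constraint amounts to making each layer's stream-mixing matrix doubly stochastic (every row and column sums to 1), enforced with Sinkhorn-style normalization. That assumption, the four-stream toy setup, and all function names are illustrative, not DeepSeek's code.

```python
import random

def matmul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def sinkhorn(m, iters=50):
    """Alternately normalize rows and columns so both sum to about 1."""
    n = len(m)
    m = [row[:] for row in m]  # work on a copy
    for _ in range(iters):
        for i in range(n):
            s = sum(m[i])
            m[i] = [v / s for v in m[i]]
        for j in range(n):
            s = sum(m[i][j] for i in range(n))
            for i in range(n):
                m[i][j] /= s
    return m

def stacked_gain(layers):
    """Worst-case max-norm amplification after applying every layer in order."""
    prod = layers[0]
    for layer in layers[1:]:
        prod = matmul(layer, prod)
    # Infinity norm: the largest absolute row sum of the combined mixing matrix.
    return max(sum(abs(v) for v in row) for row in prod)

random.seed(0)
n_streams, depth = 4, 30

# Unconstrained positive mixing weights: every row sums to at least 2.
raw = [[[random.uniform(0.5, 1.0) for _ in range(n_streams)]
        for _ in range(n_streams)] for _ in range(depth)]
# The same weights projected to (approximately) doubly stochastic matrices.
constrained = [sinkhorn(m) for m in raw]

unconstrained_gain = stacked_gain(raw)
constrained_gain = stacked_gain(constrained)

print(f"Unconstrained gain after {depth} layers: {unconstrained_gain:.3e}")
print(f"Constrained gain after {depth} layers:   {constrained_gain:.6f}")
```

In this toy setup the unconstrained stack's worst-case gain grows exponentially with depth, while the constrained stack stays at about 1 however many layers are added, which is the behavior the paragraph above describes.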

mHC improved reasoning scores by 16% on DeepSeek’s 27 billion parameter model, with similar gains across other benchmarks. The overhead is only 6-7%, which is negligible at scale.

DeepSeek published foundational training research ahead of R1, so analysts expect mHC to power their next flagship model, R2. Given that Chinese New Year falls around mid-February, don’t be surprised if R2 is announced after that.

“OpenAI’s community energy commitments are smart politics, but behind-the-meter generation has become table stakes for anyone serious about speed.”

for sure. it’s really been interesting to watch this space. great post.

Solid analysis on TSMC's monopoly problem. The comparison of capex growth (10%) vs revenue growth (50%) since 2022 really underlines how their lack of competition enabled cautious decisions. It's a textbook case of monopoly incentives being misaligned with industry needs. The 2-3 year fab timeline means this bottleneck probably shapes AI roadmaps until 2029 at least.