Nvidia Buys Groq, Google's Moat Widens, Open Source Stalls

Custom AI chips consolidate, OpenAI is being attacked on all fronts, and open source AI is losing mindshare

Nvidia Pays $20 Billion for Groq’s Inference Speed

Nvidia struck a $20 billion licensing deal with AI chip startup Groq on Christmas Eve, bringing founder Jonathan Ross and president Sunny Madra into Nvidia along with the company’s core IP. GroqCloud continues operating independently under new CEO Simon Edwards.

Groq framed it as a “non-exclusive licensing agreement,” while Nvidia CEO Jensen Huang’s internal email discussed plans to “integrate Groq’s low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads.”

This is Nvidia’s largest transaction ever, nearly triple the $7 billion Mellanox acquisition in 2019. And it signals something important: inference is disaggregating into two phases (prefill, which processes your prompt, and decode, which generates the response), and each phase has different optimal hardware.

The technical reasoning is clear. Unlike GPUs that fetch model weights from off-chip HBM memory, Groq’s LPUs store everything in fast on-chip SRAM (static RAM). This eliminates the memory bandwidth bottleneck that throttles inference speed. SRAM is more expensive and lower capacity than HBM, but dramatically faster for the decode phase of inference. Cerebras, which also relies on on-chip SRAM, hits 2,600 tokens per second on Llama 4 Scout, while the fastest GPU solutions manage 137 tokens per second.
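A back-of-envelope sketch shows why memory bandwidth caps decode speed. It assumes a dense model whose full weights are streamed from memory once per generated token, with no batching. The model size and both bandwidth figures are illustrative placeholders, not vendor specs, and the function name is made up for this example.

```python
def decode_tokens_per_second(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed.

    Assumes every generated token requires reading all model weights
    once, so throughput is limited by bandwidth / bytes moved per token.
    """
    if weight_bytes <= 0 or bandwidth_bytes_per_s <= 0:
        raise ValueError("weight_bytes and bandwidth_bytes_per_s must be positive")
    return bandwidth_bytes_per_s / weight_bytes


# Illustrative assumptions only (not measured or published figures):
WEIGHT_BYTES = 70e9        # hypothetical 70B-parameter model at 1 byte per weight
OFF_CHIP_BW = 3.5e12       # hypothetical off-chip HBM bandwidth, bytes/s
ON_CHIP_BW = 70e12         # hypothetical aggregate on-chip SRAM bandwidth, bytes/s

off_chip_bound = decode_tokens_per_second(WEIGHT_BYTES, OFF_CHIP_BW)
on_chip_bound = decode_tokens_per_second(WEIGHT_BYTES, ON_CHIP_BW)
speedup = on_chip_bound / off_chip_bound

print(f"off-chip bound: {off_chip_bound:.0f} tokens/s")
print(f"on-chip bound:  {on_chip_bound:.0f} tokens/s")
print(f"ratio:          {speedup:.0f}x")
```

Under these assumptions the ceiling scales linearly with bandwidth, which is the core of the SRAM argument. Real systems shift the numbers through batching, mixture-of-experts routing, and quantization, so treat this as intuition rather than a benchmark.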

Bank of America analyst Vivek Arya noted the deal “implies NVDA recognition that while GPU dominated AI training, the rapid shift towards inference could require more specialized chips.” He envisions future Nvidia systems where GPUs and LPUs coexist within the same rack, connected via NVLink.

Think of it as three variants of Rubin (Nvidia’s next-gen architecture after Blackwell, expected 2026) for three workload types. Rubin CPX is optimized for massive context windows during prefill with its high-capacity GDDR DRAM. Standard Rubin handles training and batched inference, with HBM striking a balance between bandwidth and capacity. A Groq-derived “Rubin SRAM” would be optimized for ultra-low-latency inference in agentic reasoning workloads.

This deal increases confidence that most ASICs except Google’s TPUs, Apple’s AI chips, and AWS Trainium will eventually be canceled. Good luck competing with three Rubin variants and Nvidia’s networking stack.

Intel is already moving in this direction with its reported $1.6 billion SambaNova acquisition. Interestingly, Intel CEO Lip-Bu Tan is also SambaNova’s chairman. SambaNova’s RDU architecture was the weakest performer among the inference-optimized chip startups. Meta, meanwhile, bought Rivos.

That leaves Cerebras in a highly strategic position as the last independent SRAM-based inference company. And Cerebras was ahead of Groq on all public benchmarks. The catch: Groq’s “many chip” rack architecture was much easier to integrate with Nvidia’s networking stack, while Cerebras’s wafer-scale engine almost has to be an independent rack.

OpenAI’s rumored ASIC may be surprisingly good (reportedly much better than the Meta and Microsoft ASICs), but the window for custom silicon is narrowing.

Google’s Distribution Moat Attacks OpenAI On All Fronts

Apple released the top free iPhone apps for 2025, and the results further show why Sam Altman called for a Code Red.

Google claimed five of the top 10 spots: Google Search (#3), YouTube (#7), Google Maps (#8), Gmail (#9), and Gemini (#10). Meta had three of the top 10.

OpenAI has only one app: ChatGPT.

OpenAI had the biggest head start in AI, but they’re now being attacked on all fronts.

And instead of solving this distribution problem, OpenAI is distracting itself with the enterprise market, where Anthropic is already winning.

This distribution gap will only grow with the power of defaults. Gemini comes pre-installed on every Android device, will be native on iOS, and is integrated directly into Chrome, which has 3.5 billion users. ChatGPT requires users to navigate to a website or download an app.

OpenAI must expand ChatGPT’s distribution, and do so while making more money from its consumers. Advertising is the obvious solution, but given Google’s headway, it may be too late.

Open Source AI Falls Further Behind

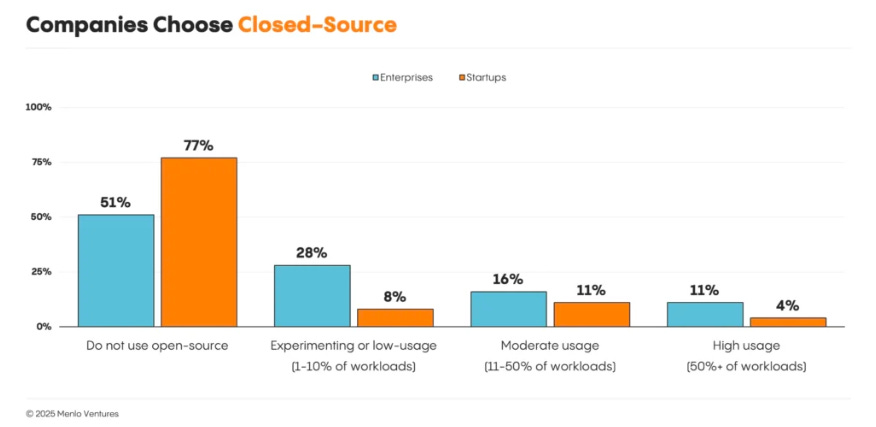

New data from Menlo Ventures shows open source losing ground faster than expected.

Production usage of open source models fell from 19% to 13% in the past six months. And the adoption numbers are worse than headlines suggest: 51% of enterprises and 77% of startups don’t use open source models at all. Only 11% of enterprises and 4% of startups run open source for more than half their AI workloads.

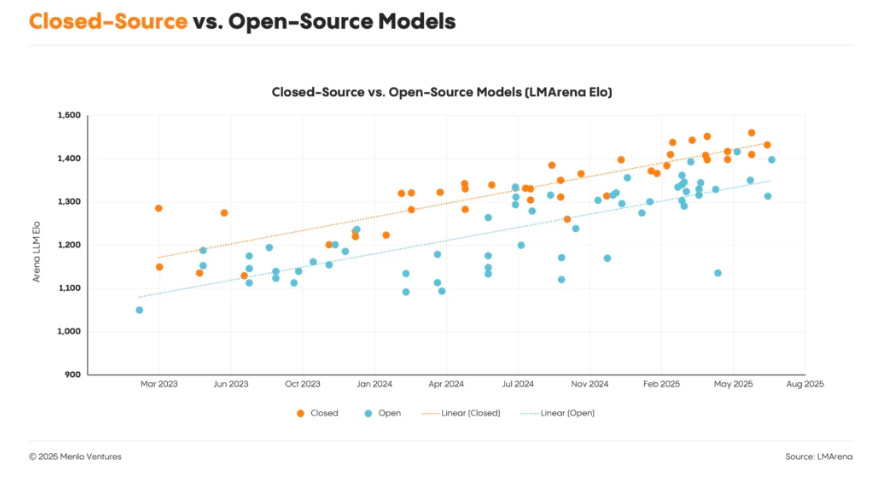

The performance gap isn’t closing either. LMArena Elo scores show closed-source models consistently outperforming open-source alternatives from March 2023 through August 2025. Both are improving on parallel tracks, but open source isn’t catching up.

The tooling layer tells a similar story. OpenAI’s $3 billion acquisition offer for Windsurf earlier this year signaled how valuable AI coding tools have become. GPT Engineer evolved into the commercial product Lovable. Continue’s “continuedev” gained traction through lean operations before major players entered the space.

The pattern is familiar: open source projects prove market demand, commercial interest follows, and the original community either gets acquired or out-resourced.

The Agentic AI Foundation, formed last week under the Linux Foundation with Anthropic, OpenAI, Google, Microsoft, and AWS as members, may accelerate this trend. The foundation will develop open standards for AI agents, but it also gives the largest players a seat at the table for defining what “open” means.