Google Won 2025, OpenAI Fumbled, Nvidia Consolidated: The 6 Stories That Defined AI in 2025

Google has the best model, the best distribution, and the best economics. OpenAI has 800 million users and a shrinking moat. Nvidia has Blackwell concerns.

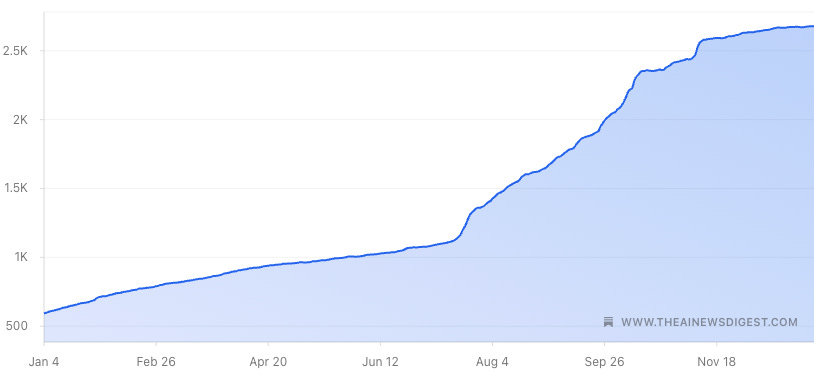

2025 has been an incredible year, and this chart shows why.

Subscribers grew 357% over the past 12 months. That growth is entirely thanks to you sharing this newsletter with others, and I don’t take it for granted. Thank you.

As for the AI industry itself, it’s wild looking back at everything that happened. Some of my predictions held up better than I expected. I couldn't find any freezing-cold takes, but comment below if you can think of any.

Here are the top stories that shaped AI in 2025, what’s happened since, and why it matters.

Nvidia & OpenAI Commoditize Each Other, OpenAI Overplays Its Hand

This one’s my favorite strategic story of the year because it shows how quickly the AI value chain can flip.

In September, Nvidia announced a partnership with OpenAI. OpenAI would deploy at least 10 gigawatts of Nvidia products. Nvidia would invest up to $100 billion in stages.

Nvidia’s goal is to commoditize its complements, in this case LLMs, by backing several model labs at once. The OpenAI deal adds to prior deals with xAI and Meta.

But do you want that as OpenAI? Of course not, and just two weeks later, OpenAI announced a 6 gigawatt deal with AMD. The funding structure was wild: in exchange for buying AMD’s GPUs, OpenAI got warrants for 10% of AMD at $0.01 per share. AMD was worth $343 billion. OpenAI was essentially given $34 billion of AMD stock for doing the deal.

AMD’s market cap rose $76 billion in two days, roughly a 2x return on the equity it gave OpenAI.
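The warrant arithmetic is easy to check. Here is a minimal back-of-the-envelope sketch using only the figures quoted above, assuming the warrants are worth their full share of AMD’s market cap (treating the $0.01 strike as zero and ignoring dilution and any vesting conditions):

```python
# Back-of-the-envelope check of the AMD warrant math, using the article's figures.
# Assumptions: warrants valued at their full share of AMD's market cap
# (the $0.01 strike treated as zero), dilution and vesting ignored.

amd_market_cap_bn = 343.0    # AMD valuation at announcement, $B (as cited)
warrant_stake = 0.10         # ~10% of AMD via warrants (as cited)
market_cap_gain_bn = 76.0    # two-day market-cap increase, $B (as cited)

# Value of the equity AMD effectively handed to OpenAI.
warrant_value_bn = amd_market_cap_bn * warrant_stake

# How many times over the two-day market-cap gain covers that equity.
gain_multiple = market_cap_gain_bn / warrant_value_bn

print(f"Implied warrant value: ${warrant_value_bn:.1f}B")
print(f"Two-day gain vs. equity given: {gain_multiple:.1f}x")
```

That works out to about $34.3 billion of equity and a gain of roughly 2.2x, which is where the “2x return” comes from. It is a paper gain in market cap, not cash AMD received.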

But we later learned that Sam Altman might have overplayed his hand: the Nvidia deal was only a letter of intent! According to Nvidia’s 10-Q, “there is no assurance that we will enter into definitive agreements with respect to the OpenAI opportunity.”

Anthropic then announced a partnership with Nvidia and Microsoft, while OpenAI’s Nvidia deal is still up in the air.

With that deal, Anthropic’s models are now the only frontier models available on all three major clouds (AWS, Azure, GCP), and Anthropic added Nvidia as a backer.

Gemini 3 Proves Google’s Vertical Integration, While Blackwell Keeps Breaking

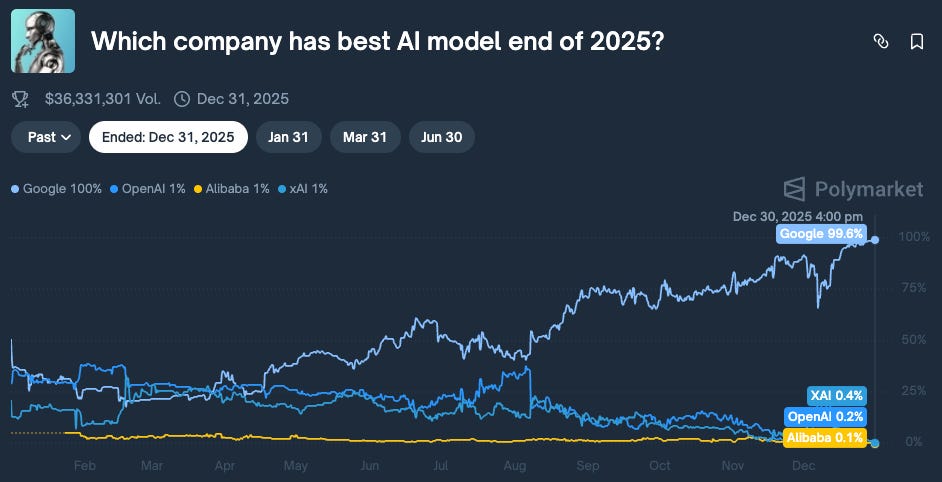

I wrote back in April about Google’s vertical integration. Back then, Google was roughly tied with OpenAI and xAI in the race to have the best AI model by the end of the year.

And as you can see, by the end of the year, that vertical integration helped Google blow past the competition.

The big reason is Google’s proprietary TPUs, which it uses to train all of its AI models, including Gemini 3. TPUs are cheaper to run than Nvidia’s GPUs and, more critically, don’t depend on Nvidia.

But there’s another part of this story nobody is talking about: no frontier model has been primarily trained on Nvidia’s Blackwell chips yet.

Nvidia’s next-generation Blackwell GPUs have been plagued by overheating issues. The chips overheat when installed in high-capacity server racks with 72 processors, consuming up to 120kW per rack. Nvidia has reportedly asked suppliers to redesign the racks multiple times.
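To put the 120 kW figure in perspective, a rough sketch dividing the rack budget across its 72 processors (treated here as GPUs). This is an upper bound per GPU, since CPUs, switches, and networking also share the rack’s power, and it relies on the physical fact that nearly all electrical power drawn ends up as heat the cooling system has to remove:

```python
# Rough per-GPU power and heat load for a 72-processor Blackwell rack.
# Assumption: the full 120 kW is attributed to the 72 processors (treated as
# GPUs), so kw_per_gpu is an upper bound; other rack components also draw power.

rack_power_kw = 120.0   # peak rack draw cited in the text
gpus_per_rack = 72      # processors per rack cited in the text

# Upper bound on the average power budget per GPU.
kw_per_gpu = rack_power_kw / gpus_per_rack

# Nearly all electrical power becomes heat; energy the cooling must remove per day.
heat_kwh_per_day = rack_power_kw * 24

print(f"<= {kw_per_gpu:.2f} kW per GPU")
print(f"~{heat_kwh_per_day:,.0f} kWh of heat per rack per day at full load")
```

At full load that is about 1.67 kW per GPU and roughly 2,880 kWh of heat per rack per day, which is why rack cooling design matters so much here.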

The problems got bad enough that major customers started cutting orders. Microsoft, Amazon, Google, and Meta all reportedly scaled back Blackwell orders due to the overheating issues and connection glitches between chips. OpenAI even asked Microsoft for older Hopper chips because of Blackwell delays.

AWS looks worse by comparison too. Google’s TPUs are producing frontier models. AWS announced Trainium2 with impressive specs but hasn’t announced a model this capable hosted on their infrastructure. This could change if AWS can get OpenAI training models on those chips.

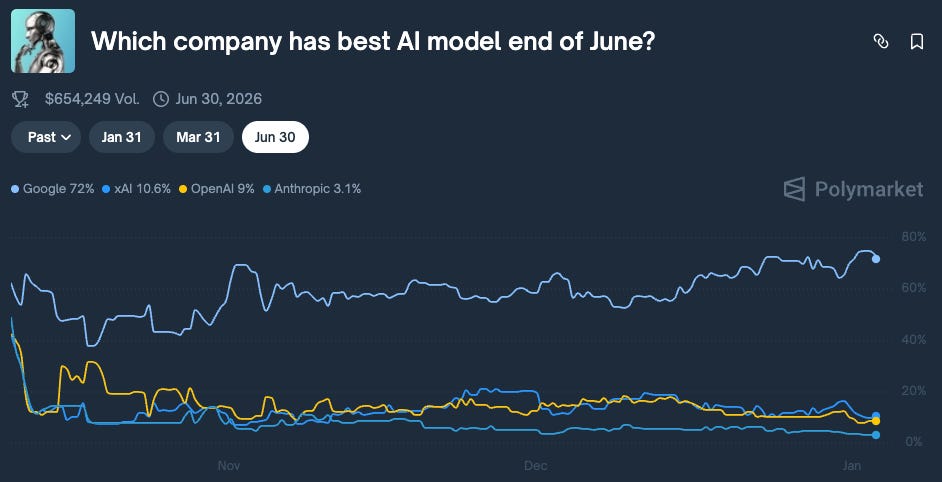

As for what the market thinks about the future: the furthest Polymarket looks is June 2026, and the market thinks Google is running away with it.

The real question is whether Google can overtake Anthropic in coding. If it does, Anthropic could lose a ton of ARR practically overnight.

Google’s Power of Defaults Threatens OpenAI More Than Any Model

I’m pretty convinced this is what keeps Sam Altman up at night.

In October, I wrote about Judge Mehta’s ruling that allowed Google to keep paying for search defaults on Apple. I immediately assumed that if they can keep paying for search defaults, they will then pay for AI as well.

Google pays Apple over $20 billion per year so Google Search is the default on Safari. They learned what defaults mean the hard way, back in 2012 when they overplayed their hand on Maps and Apple released their own competing product.

Despite being one of the worst launches ever, and being memorialized in the HBO show Silicon Valley, Apple Maps still took meaningful share.

Google learned their lesson. They ramped up their patronage program and never lost default status again.

I asked the obvious question: what’s stopping Google from paying Apple to make Gemini the default AI on iOS?

And that’s essentially what happened, with one twist: Apple is reportedly paying Google $1 billion per year, but that is practically free for a 1.2-trillion-parameter model. OpenAI’s GPT-5 cost between $1.25 billion and $2.5 billion for training alone.

Also, consider that Meta spent $18 billion on GPUs alone in 2024. Google is essentially giving Gemini away to keep Apple from building anything competitive.
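A quick sketch of why $1 billion per year looks “practically free,” using only the figures cited in the two paragraphs above (the reported Apple payment, the GPT-5 training-cost range, and Meta’s 2024 GPU spend):

```python
# Compare the reported Apple -> Google payment with the cited AI costs.
# All inputs are the article's figures; nothing else is assumed.

apple_payment_bn_per_year = 1.0    # reported Apple -> Google payment, $B/yr
gpt5_training_bn = (1.25, 2.5)     # cited GPT-5 training cost range, $B
meta_gpu_spend_2024_bn = 18.0      # cited Meta GPU spend in 2024, $B

# Years of Apple payments needed to equal one GPT-5-scale training run.
years_low, years_high = (cost / apple_payment_bn_per_year for cost in gpt5_training_bn)

# Apple's annual payment as a fraction of Meta's 2024 GPU budget.
share_of_meta_gpu_spend = apple_payment_bn_per_year / meta_gpu_spend_2024_bn

print(f"Years of payments per GPT-5-scale run: {years_low:.2f} to {years_high:.2f}")
print(f"Share of Meta's 2024 GPU spend: {share_of_meta_gpu_spend:.1%}")
```

It would take 1.25 to 2.5 years of Apple’s payments just to match one GPT-5-scale training run, and a full year of payments is only about 5.6% of what Meta spent on GPUs in 2024.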

It’s the search playbook all over again. They’re paying Apple to not compete, and this deal could keep Apple out of the biggest paradigm shift.

Now the question is, if you’re OpenAI and Gemini is the default for both iOS and Android, how do you compete in the consumer space?

OpenAI’s Consumer Distribution Moat is Collapsing

Sam Altman hyped GPT-5 with that Death Star post. Expectations were sky-high, especially after GPT-4.5 got deprecated just months after release.

GPT-5 showed solid improvement. But Polymarket odds for OpenAI having the best AI model by end of 2025 crashed from 40% pre-launch to 18%.

And the momentum kept falling from there. Sam Altman declared a “code red” after Gemini took more share, and competitors are attacking OpenAI’s distribution on all fronts.

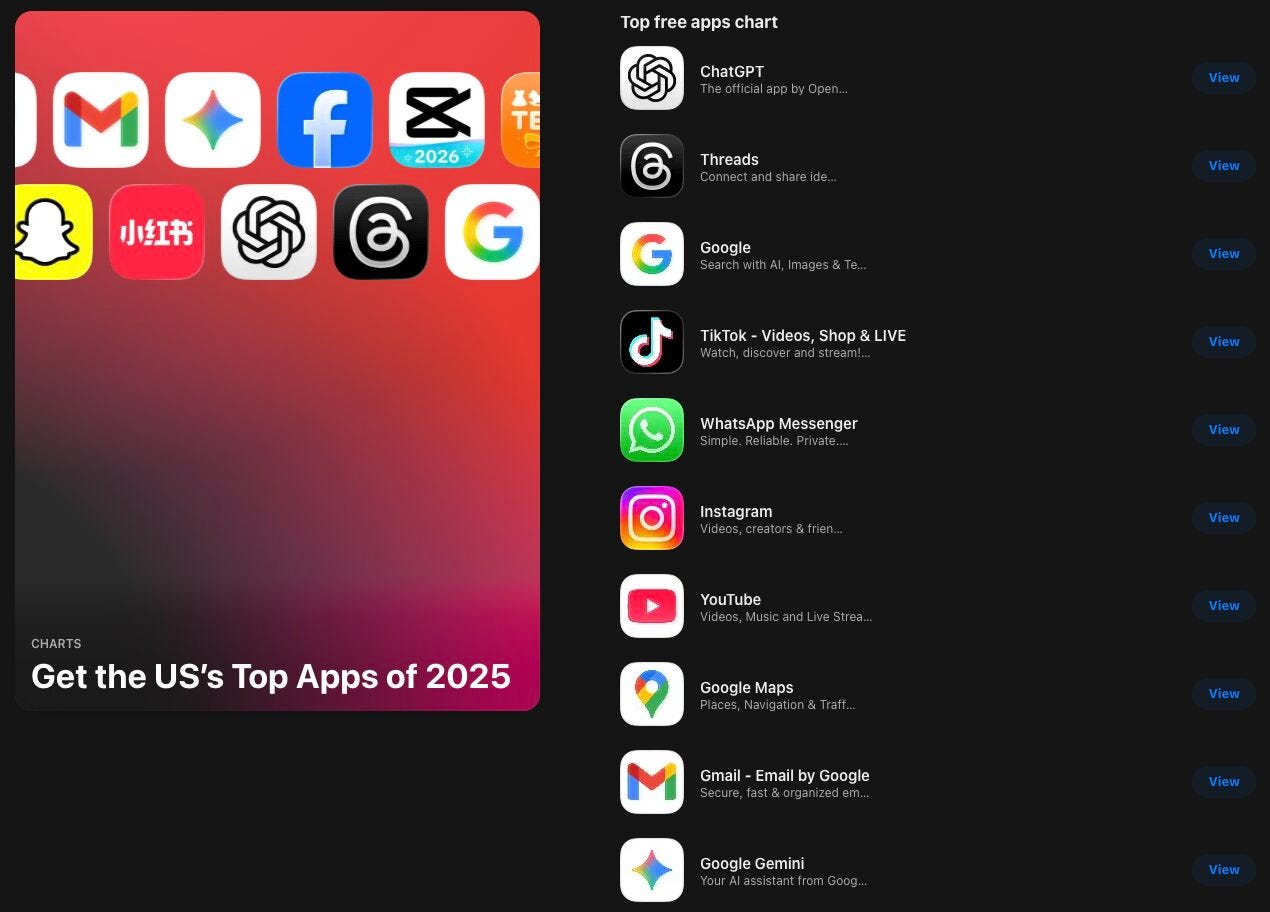

Google has 5 of the top 10 iOS apps and is the default LLM on both Android and iOS. Sora was a nice fad for a couple of months, but OpenAI’s only durable iOS app is ChatGPT.

GPT-5.1 launched three months later with no API and no benchmarks. It was the first time in OpenAI’s history that a commercial model came out without either. It was probably rushed to preempt Google’s Gemini 3 announcement.

ChatGPT has 800 million weekly active users, but their consumer dominance is waning, and Anthropic is rapidly capturing the enterprise.

Nvidia Paid $20 Billion for Groq, Putting the Remaining Inference Chips on Notice

Nvidia announced a $20 billion “licensing deal” with Groq on Christmas Eve. But with 90% of Groq’s staff moving to Nvidia, let’s call it an acquisition.

This deal matters because Groq’s LPUs were a real alternative to Nvidia’s GPUs for inference. They keep data in fast on-chip SRAM instead of fetching it from off-chip HBM. For a sense of the speed gap, Cerebras, the last independent SRAM-based inference company, hits 2,600 tokens per second on Llama 4 Scout, while the fastest GPU solutions hit 137.
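Here is what that throughput gap means for a user, as a short sketch built on the two cited figures. The 1,000-token response length is a hypothetical example, and the sketch assumes steady-state generation speed (no time-to-first-token or network overhead):

```python
# Translate the cited tokens/second figures into user-facing latency.
# Assumptions: steady-state decode speed only; RESPONSE_TOKENS is hypothetical.

sram_tokens_per_s = 2600   # Cerebras on Llama 4 Scout (as cited)
gpu_tokens_per_s = 137     # fastest GPU solution (as cited)

RESPONSE_TOKENS = 1000     # hypothetical length of one long answer

speedup = sram_tokens_per_s / gpu_tokens_per_s

# Milliseconds spent generating each token.
ms_per_token_sram = 1000 / sram_tokens_per_s
ms_per_token_gpu = 1000 / gpu_tokens_per_s

# Wall-clock time to stream the whole answer.
seconds_sram = RESPONSE_TOKENS / sram_tokens_per_s
seconds_gpu = RESPONSE_TOKENS / gpu_tokens_per_s

print(f"Speedup: {speedup:.1f}x")
print(f"Per token: {ms_per_token_sram:.2f} ms vs {ms_per_token_gpu:.2f} ms")
print(f"{RESPONSE_TOKENS}-token answer: {seconds_sram:.2f} s vs {seconds_gpu:.2f} s")
```

That is roughly a 19x gap: about 0.4 seconds versus 7.3 seconds for a 1,000-token answer, the difference between an instant reply and a noticeable wait.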

I wrote after the acquisition that this “increases confidence that most ASICs except Google’s TPUs, Apple’s AI chips, and AWS Trainium will eventually be canceled.”

Nvidia just consolidated the inference market before it even fully formed. They now have a path to three Rubin variants for three workload types. Intel bought SambaNova. Meta bought Rivos. Cerebras is the last independent SRAM-based inference company standing.

Yann LeCun Bet Against LLMs, and Lost the Room

The AI community treated this like a funeral.

Yann LeCun, Turing Award winner, deep learning pioneer, and chief AI scientist at Meta for 12 years, announced he was leaving to start a company focused on “world models.”

The narrative was brain drain. Only 3 of the 14 original Llama researchers remain at Meta. 600 AI layoffs hit FAIR directly. Analysts warned about “strategic disruption.”

But I argued his departure made complete sense for both sides.

LeCun spent years publicly arguing LLMs are a “dead end.” He testified to Congress that a cat understands the physical world better than the biggest LLMs. And the research keeps piling up in his favor. Apple showed frontier reasoning models suffer “complete accuracy collapse” beyond certain thresholds. Scaling returns are following exponential decay curves.

Sam Altman himself acknowledged GPT-5 has “saturated the chat use case.”

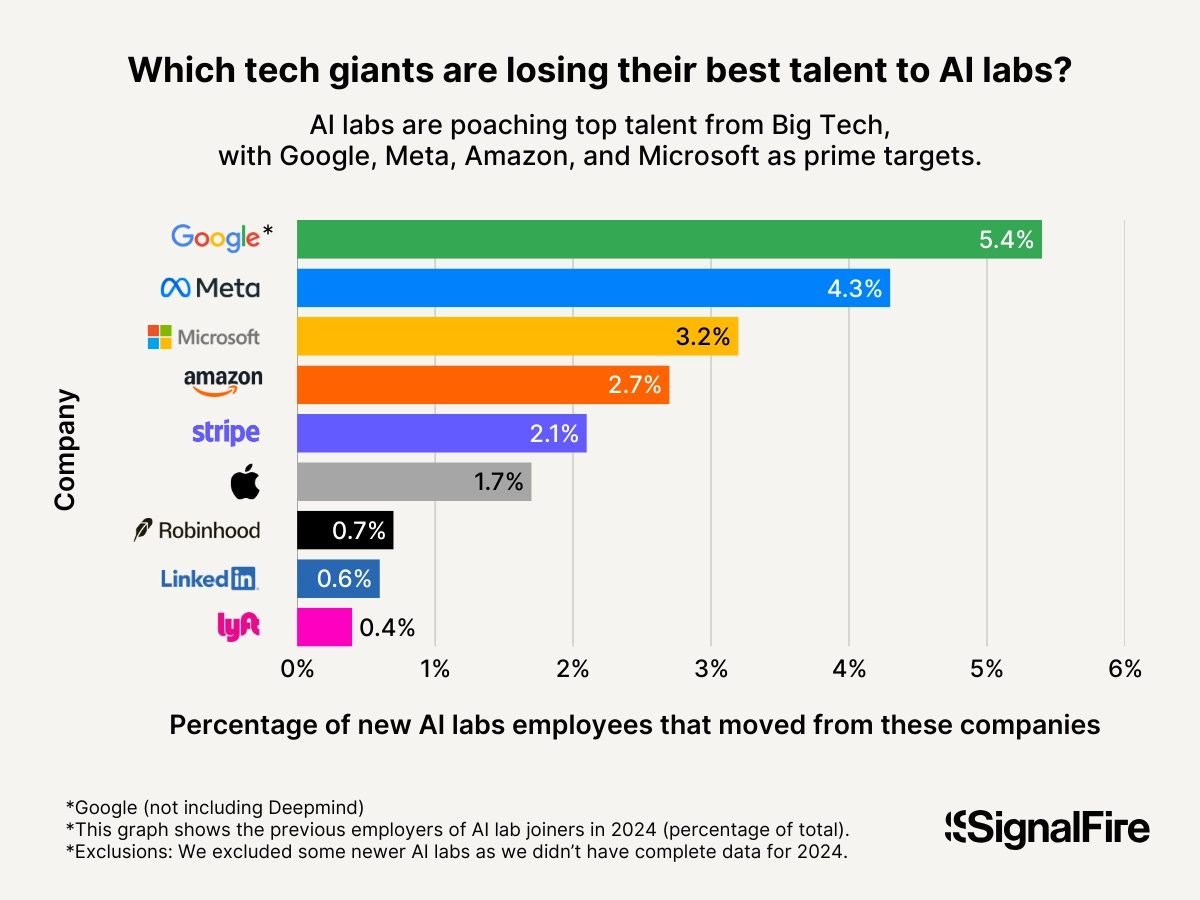

Meanwhile, Meta’s AI-powered advertising makes over $60 billion ARR. They can’t afford philosophical debates. Meta had the second-highest AI talent attrition rate despite offering multi-million dollar pay packages.

How do you recruit researchers when your chief scientist calls their work “a distraction, a dead end”?

LeCun might be right that world models are necessary for AGI. Meta might be right that LLMs can unlock trillions in ad revenue. Both things can be true. The godfather bet against LLMs. He lost the room. Now he gets to prove them wrong.

What’s Next?

Google won 2025. They have the best model, the best distribution, and the best infrastructure economics. Nvidia made a ton of money, but it must prove out Blackwell, and still no frontier model has been primarily trained on it.

OpenAI still has 800 million weekly users. But that lead is shrinking while Anthropic takes the enterprise and Google takes the defaults.

The question for 2026: Can OpenAI find distribution before Google takes more share?
